XOR

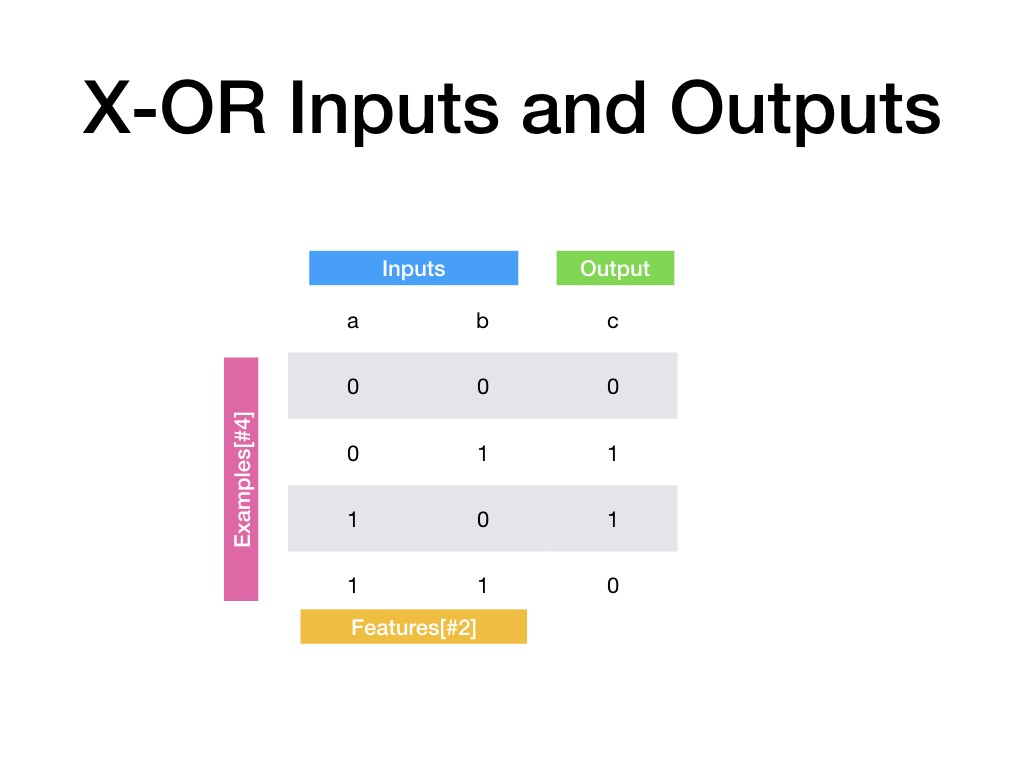

This example uses a multilayer perceptron to solve the XOR problem. To understand it, let's look at the diagram above for a moment.

First of all, we need to create the data used to train the neural network.
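As a minimal sketch of what this data looks like, the four XOR samples and their expected outputs can be written as two matrices with one row per sample. Plain Python lists stand in for the library's own matrix type here, and the names `inputs` and `outputs` are chosen for illustration only.

```python
# XOR truth table as two matrices, one row per sample.
# Nested lists stand in for the library's matrix type (an assumption).
inputs = [
    [0.0, 0.0],
    [0.0, 1.0],
    [1.0, 0.0],
    [1.0, 1.0],
]

# Expected output for each row: 1 when exactly one input is 1, else 0.
outputs = [[float(int(a) ^ int(b))] for a, b in inputs]

for row, target in zip(inputs, outputs):
    print(row, "->", target)
```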

As we can see, the matrices correspond to the inputs and outputs of the problem to solve.

We also need to create the test matrix used to validate the results.

The data configuration is finished, so we can now create our neural network!

Now that our network is declared, we can create the activation functions and the loss function that we will use to solve this problem.
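The text does not say which activation and loss functions are used, so the following is only a sketch under the assumption of tanh and sigmoid activations with a mean squared error loss. Each function is paired with its derivative, since both are needed during backpropagation. The function names are illustrative and are not the library's API.

```python
import math

def tanh(x):
    return math.tanh(x)

def tanh_prime(x):
    # d/dx tanh(x) = 1 - tanh(x)^2
    return 1.0 - math.tanh(x) ** 2

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x))
    s = sigmoid(x)
    return s * (1.0 - s)

def mse(expected, predicted):
    # Mean of the squared differences between targets and predictions.
    return sum((e - p) ** 2 for e, p in zip(expected, predicted)) / len(expected)

def mse_prime(expected, predicted):
    # Gradient of the MSE with respect to each predicted value.
    n = len(expected)
    return [2.0 * (p - e) / n for e, p in zip(expected, predicted)]

print(mse([0.0, 1.0], [0.5, 0.5]))        # 0.25
print(mse_prime([0.0, 1.0], [0.5, 0.5]))  # [0.5, -0.5]
```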

To build the structure of our network, we must create its layers:

The first layer has 2 inputs (the two binary values, 0 or 1, that make up one sample of the data set created earlier) and 5 outputs. The last layer has 5 inputs, which receive the outputs of the first layer, and 1 output, which represents the value we want to predict.
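To make these shapes concrete, here is a minimal sketch of two fully connected layers chained as 2 → 5 → 1. The `Dense` class is hypothetical and written for illustration, and activations are left out to keep the focus on the dimensions.

```python
import random

class Dense:
    """Fully connected layer: output[j] = sum_i(weights[j][i] * x[i]) + biases[j]."""

    def __init__(self, n_inputs, n_outputs, rng):
        # One row of weights per output neuron, with small random starting values.
        self.weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                        for _ in range(n_outputs)]
        self.biases = [0.0] * n_outputs

    def forward(self, x):
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.weights, self.biases)]

rng = random.Random(0)
hidden = Dense(2, 5, rng)  # 2 inputs (one XOR sample) -> 5 outputs
output = Dense(5, 1, rng)  # 5 inputs (the hidden outputs) -> 1 predicted value

sample = [0.0, 1.0]
h = hidden.forward(sample)
y = output.forward(h)
print(len(h), len(y))  # 5 1
```

The number of inputs of each layer must equal the number of outputs of the layer before it, which is why the second layer is declared with 5 inputs.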

Now let's add the layers to the network.

All that remains is to train the network, specifying which loss function we want to use, and check that it works.

Here, we pass to the "fit" function the input data set, the result set, the number of epochs, the learning rate, and the batch size. Because the dataset is so small, we set the batch size to 1.
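To show what such a call does internally, here is a self-contained sketch of a training loop that takes the same five arguments. It is not the library's implementation: it assumes a 2 → 5 → 1 network with tanh activations, a squared error loss, and plain mini-batch gradient descent, and the `seed` parameter is added here only to make runs repeatable.

```python
import math
import random

def fit(x_train, y_train, epochs, learning_rate, batch_size, seed=0):
    """Train a 2 -> 5 -> 1 tanh network; return (predict, per-epoch mean loss)."""
    rng = random.Random(seed)
    n_in, n_hid = len(x_train[0]), 5
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)]
    b1 = [0.0] * n_hid
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hid)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w1, b1)]
        y = math.tanh(sum(w * hi for w, hi in zip(w2, h)) + b2)
        return h, y

    samples = list(zip(x_train, y_train))
    history = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            # Accumulate the gradients over the current batch.
            g_w1 = [[0.0] * n_in for _ in range(n_hid)]
            g_b1 = [0.0] * n_hid
            g_w2 = [0.0] * n_hid
            g_b2 = 0.0
            for x, target in batch:
                h, y = forward(x)
                epoch_loss += (y - target[0]) ** 2
                # Backpropagate the squared error through both tanh layers.
                d_out = 2.0 * (y - target[0]) * (1.0 - y * y)
                g_b2 += d_out
                for j in range(n_hid):
                    g_w2[j] += d_out * h[j]
                    d_hid = d_out * w2[j] * (1.0 - h[j] * h[j])
                    g_b1[j] += d_hid
                    for i in range(n_in):
                        g_w1[j][i] += d_hid * x[i]
            # Take one step along the averaged gradient.
            scale = learning_rate / len(batch)
            b2 -= scale * g_b2
            for j in range(n_hid):
                w2[j] -= scale * g_w2[j]
                b1[j] -= scale * g_b1[j]
                for i in range(n_in):
                    w1[j][i] -= scale * g_w1[j][i]
        history.append(epoch_loss / len(samples))

    return (lambda x: forward(x)[1]), history

x_train = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
y_train = [[0.0], [1.0], [1.0], [0.0]]
predict, history = fit(x_train, y_train, epochs=2000, learning_rate=0.1, batch_size=1)
print("first epoch loss:", history[0])
print("last epoch loss:", history[-1])
```

With a batch size of 1, the weights are updated after every sample, which is affordable here because each epoch contains only four samples.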

We want to test our network on the test data set created earlier, so we can write:

Now you can compile and observe your results!

MNIST Dense

We will now turn to a slightly more complicated problem: classifying the MNIST digits. The MNIST database is a set of grayscale images of handwritten digits. It is a very well-known dataset in the machine learning world because a great deal of research has been done on it. Banks, for example, use this kind of algorithm to automatically read the numbers you have written on your bank draft, and you can see why it matters that the algorithm can tell a 1 from a 9 ;)

We will use the same fully connected structure as in the XOR example, changing only the input size and the number of hidden layers.

First, you need to import the MNIST data. Fortunately, Neural has a function that allows you to quickly import and manipulate the MNIST dataset.

We import 54,000 samples for training and check the network on 30 samples. We must now create our neural network. This problem consumes more resources than the XOR problem, so we can spread the work across multiple threads like this:
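The library's own threading call is not reproduced here. As a rough illustration of the general idea only, the sketch below splits a set of samples into one chunk per worker thread, processes the chunks concurrently, and reassembles the results in their original order. The helper names `split_into_chunks` and `parallel_map` are hypothetical, and in CPython threads do not speed up pure-Python arithmetic, so this shows the partitioning rather than a real performance gain.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(samples, n_chunks):
    """Split samples into n_chunks contiguous, nearly equal parts."""
    size, extra = divmod(len(samples), n_chunks)
    chunks, start = [], 0
    for k in range(n_chunks):
        # The first `extra` chunks receive one additional sample.
        end = start + size + (1 if k < extra else 0)
        chunks.append(samples[start:end])
        start = end
    return chunks

def parallel_map(fn, samples, n_threads):
    """Apply fn to every sample, one chunk per worker thread, keeping order."""
    chunks = split_into_chunks(samples, n_threads)
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        results = pool.map(lambda chunk: [fn(s) for s in chunk], chunks)
    return [r for chunk_result in results for r in chunk_result]

# Stand-in workload: squaring numbers instead of running a forward pass.
squares = parallel_map(lambda v: v * v, list(range(10)), n_threads=4)
print(squares)
```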

An MNIST image is 28 × 28 pixels with a single grayscale channel, so the first layer needs 28 × 28 = 784 inputs, one per pixel. The last layer represents the list of values that the network will predict. We want to predict a digit between 0 and 9, so it has 10 neurons, each giving the probability that the input image shows the corresponding digit.
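The sketch below illustrates both ends of this network: flattening a 28 × 28 image into 784 input values, and reading a digit off the 10 output values. Scaling pixels to the range 0 to 1, one-hot encoding the labels, and using a softmax to obtain probabilities are common choices assumed here for illustration; the text does not state which of them the library applies.

```python
import math

def flatten(image):
    """Turn a 28x28 grid of 0-255 pixels into 784 values in [0, 1]."""
    return [pixel / 255.0 for row in image for pixel in row]

def one_hot(digit, n_classes=10):
    """Encode a digit label as a vector containing a single 1."""
    return [1.0 if k == digit else 0.0 for k in range(n_classes)]

def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predicted_digit(probabilities):
    """Return the index of the most probable class."""
    return max(range(len(probabilities)), key=probabilities.__getitem__)

blank = [[0] * 28 for _ in range(28)]  # placeholder image, all pixels off
print(len(flatten(blank)))             # 784

scores = [0.0] * 10
scores[7] = 5.0                        # made-up scores favouring the digit 7
print(predicted_digit(softmax(scores)))  # 7
```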

We can now train our network:

MNIST Conv

The MNIST problem can also be solved with a convolutional network.

Import the data:

Create the network and assign threads

Create the activation and loss functions

Create the filter and convolution dimensions
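The filter size used by the original example is not given, so the sketch below assumes a square filter and shows how its size determines the size of the convolution output, using the standard formula `(input + 2 * padding - kernel) // stride + 1`. It also includes a small stride-1, unpadded convolution to show where that output size comes from. The function names are illustrative only.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Side length of the output produced by a square convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1

def conv2d(image, kernel):
    """Unpadded, stride-1 2D cross-correlation of a square image and kernel."""
    k = len(kernel)
    out = conv_output_size(len(image), k)
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(k) for j in range(k))
             for c in range(out)]
            for r in range(out)]

# A 3x3 filter (assumed size) over a 28x28 MNIST image gives a 26x26 output.
print(conv_output_size(28, 3))  # 26

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, 1]]
print(conv2d(image, kernel))  # [[6, 8], [12, 14]]
```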

Create the different layers

Assign the layers to the network

Call the fit function and predict with the data samples