Core

Loss

Loss functions are used to determine the error (aka “the loss”) between the output of our algorithms and the given target value. In layman’s terms, the loss function expresses how far off the mark our computed output is.

Mean Squared Error

Measures the average of the squared errors, that is, the average squared difference between the estimated values and the actual values. MSE is a risk function, corresponding to the expected value of the squared error loss.
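
As a minimal sketch of the definition above (plain Python, not the library's own implementation; the function name `mean_squared_error` is hypothetical), the loss can be computed as:

```python
def mean_squared_error(predicted, target):
    """Average of the squared differences between predictions and targets."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    if not predicted:
        raise ValueError("inputs must not be empty")
    # Square each error, sum them, and divide by the number of samples.
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)


# Errors are 1.0 and 2.0, so the loss is (1 + 4) / 2.
loss = mean_squared_error([2.0, 4.0], [1.0, 2.0])
```

Because the errors are squared, large deviations are penalized more heavily than small ones.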

Cross Entropy

Cross-entropy is a measure from information theory that builds on entropy and quantifies the difference between two probability distributions.
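
A minimal sketch of this measure for two discrete distributions, assuming the usual definition H(p, q) = -sum(p_i * ln(q_i)). The name `cross_entropy` and the clamping constant `eps` are illustrative assumptions, not part of the library:

```python
import math


def cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy H(p, q) = -sum(p_i * ln(q_i)) of two discrete distributions."""
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    # Clamp predictions away from 0 so that log() never receives 0.
    return -sum(p * math.log(max(q, eps)) for p, q in zip(target, predicted))


# One-hot target: only the probability assigned to the true class contributes.
loss = cross_entropy([0.0, 1.0, 0.0], [0.2, 0.7, 0.1])
```

With a one-hot target, the result reduces to the negative log of the probability predicted for the correct class.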

Activation

An activation function is used to compute the output of a node. It is also known as a transfer function.

- See also

- Neural::Activation

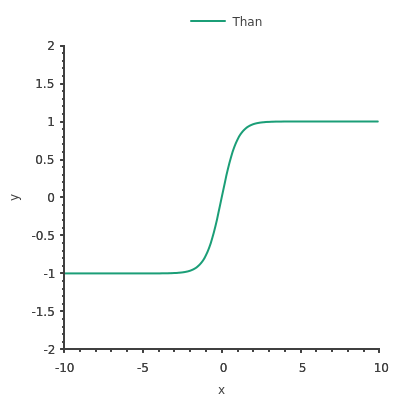

Tanh

This function is defined as the ratio of the hyperbolic sine to the hyperbolic cosine.

- See also

- Neural::Than

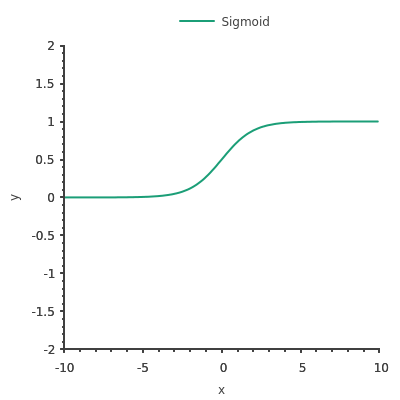
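
The hyperbolic tangent is the hyperbolic sine divided by the hyperbolic cosine. As a rough sketch (plain Python with hypothetical function names, not the `Neural::Than` implementation), the function and its derivative could look like this:

```python
import math


def tanh(x):
    """Hyperbolic tangent written out as sinh(x) / cosh(x)."""
    # Note: math.sinh and math.cosh overflow for very large |x|;
    # math.tanh(x) is the robust choice outside of illustrations.
    return math.sinh(x) / math.cosh(x)


def tanh_derivative(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - tanh(x) ** 2
```

The output always lies between -1 and 1, and the derivative is largest at x = 0.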

Sigmoid

The sigmoid activation function converts the weighted sum of a node's inputs to a value between 0 and 1.

- See also

- Neural::Sigmoid

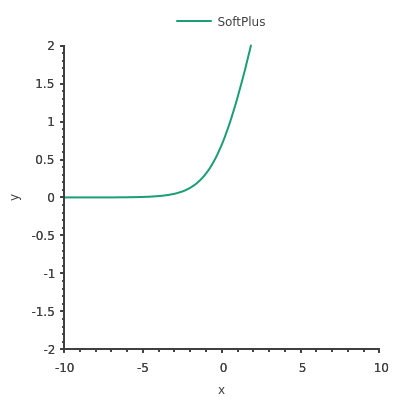
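
A minimal sketch of the sigmoid, assuming the standard logistic form 1 / (1 + e^(-x)). The code is plain Python with hypothetical names and is not taken from `Neural::Sigmoid`:

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + exp(-x)), split by sign to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, exp(x) is small, so this form cannot overflow.
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_derivative(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)
```

A weighted sum of 0 maps to 0.5; large positive sums approach 1 and large negative sums approach 0.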

SoftPlus

The SoftPlus function, ln(1 + e^x), is a smooth approximation of ReLU.

- See also

- Neural::SoftPlus

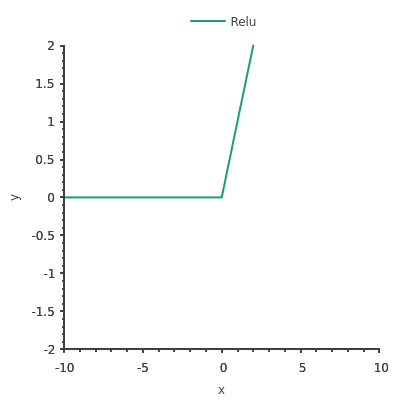
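
SoftPlus is commonly defined as ln(1 + e^x). The following is a small sketch under that assumption (plain Python, hypothetical function name, not the `Neural::SoftPlus` implementation):

```python
import math


def softplus(x):
    """SoftPlus ln(1 + exp(x)), rewritten to stay finite for large |x|."""
    # ln(1 + e^x) == max(x, 0) + ln(1 + e^(-|x|)), which never overflows.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

For large positive inputs the result is close to x, and for large negative inputs it is close to 0, which is why the curve resembles a smoothed ReLU.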

ReLU

This function acts as a filter on the data: positive values (x > 0) pass through unchanged to the following layers of the neural network, while all other values are set to 0. It is used almost everywhere in the intermediate layers, but not in the final layer.

- See also

- Neural::Relu

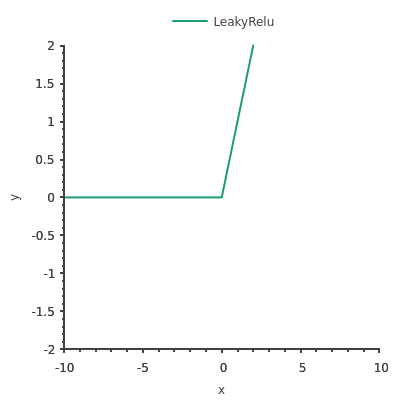
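
The filtering behaviour of ReLU can be sketched in a few lines. This is illustrative Python with hypothetical names, not the `Neural::Relu` source:

```python
def relu(x):
    """Pass positive values through unchanged; map everything else to 0."""
    return x if x > 0 else 0.0


def relu_derivative(x):
    """Gradient is 1 for positive inputs and 0 otherwise."""
    return 1.0 if x > 0 else 0.0


# Applying the function element-wise to a layer's weighted sums.
outputs = [relu(v) for v in [-2.0, 0.0, 3.5]]
```

Only the positive entry survives; the negative and zero entries become 0.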

LeakyReLU

Leaky ReLUs allow a small, positive gradient when the unit is not active (x < 0).

- See also

- Neural::LeakyRelu
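
A minimal sketch of a leaky ReLU. The slope `alpha=0.01` is a common default chosen here as an assumption; the value used by `Neural::LeakyRelu` is not stated in this page:

```python
def leaky_relu(x, alpha=0.01):
    """Identity for positive inputs; a small slope alpha for the rest."""
    return x if x > 0 else alpha * x


def leaky_relu_derivative(x, alpha=0.01):
    """Gradient is 1 when the unit is active and alpha when it is not."""
    return 1.0 if x > 0 else alpha
```

Unlike plain ReLU, the gradient never becomes exactly 0, so inactive units can still receive weight updates.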

Layers

Activation

- See also

- Neural::Activation_layer

Fully Connected

- See also

- Neural::Fc_Layer

Convolution

Applies a convolution to the inputs. The convolution layer takes a tuple of 3 integers for the dimensions of the input, a tuple of 3 integers for the dimensions of the kernel, and an integer each for the stride and the padding.

- See also

- Neural::Conv_Layer
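
To illustrate how stride and padding affect a convolution, here is a simplified single-channel sketch in plain Python. It is not the `Neural::Conv_Layer` implementation: the function names are hypothetical, it works on 2D inputs only rather than the 3-integer dimensions described above, and it assumes zero padding and no kernel flip (cross-correlation), as is common in neural network libraries.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output length along one axis: floor((n + 2p - k) / s) + 1."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def conv2d(image, kernel, stride=1, padding=0):
    """Naive single-channel 2D convolution over nested lists."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # Surround the input with `padding` rows and columns of zeros.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    out_h = conv_output_size(h, kh, stride, padding)
    out_w = conv_output_size(w, kw, stride, padding)
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the window whose top-left corner is (i*s, j*s).
            out[i][j] = sum(
                padded[i * stride + di][j * stride + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return out


feature_map = conv2d([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, 1]])
```

A larger stride shrinks the output, while padding enlarges the region the kernel can visit, so the two parameters together determine the output dimensions.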

Flatten

- See also

- Neural::Flatten_Layer